Exploring The Depths Of Artificial Neural Networks: A Look Through The Lens Of ANN

Have you ever stopped to consider how machines learn, how they make sense of the world, or how they manage to predict complex patterns? At the heart of much of this lies a technology called Artificial Neural Networks, or ANNs. Loosely modeled on our own brains, these systems process information and find connections we might not immediately see. Today we'll pull back the curtain on this technology, looking at its core ideas and at how it shapes much of what we experience in our increasingly connected lives.

So, what exactly is an ANN? Essentially, it's a computational model inspired by the biological neural networks found in animal brains. It is designed to approximate functions or estimate values from data, a bit like a detective piecing together clues. A surprising amount of modern technology relies on these structures, from recognizing faces on your phone to helping doctors make diagnoses. All of it comes down to digital "neurons" working together.

This exploration covers the fundamental makeup of these networks, their main forms, and how they relate to other cutting-edge ideas such as spiking neural networks. We'll also consider the broader impact of the technology and the people who drive its development. It's an exciting time to think about these things, as the possibilities keep expanding.

Table of Contents

- ANN: A Closer Look at Artificial Neural Networks

- The Building Blocks of ANN: Layers and Connections

- ANN and SNN: A Complementary Relationship

- Applications and Impact: Where ANNs Shine

- The Human Element Behind ANN's Progress

- ANN in Academia and Research

- Frequently Asked Questions About ANN

- Conclusion

ANN: A Closer Look at Artificial Neural Networks

Artificial Neural Networks, or ANNs, sit at the core of much of what is called "machine learning." They are loosely modeled on the way our brains process information. They learn from data to recognize patterns, make predictions, or classify things, and they have substantially changed what computers can do.

These networks are called "neural networks" because their structure is loosely inspired by the neurons and synapses in our brains. They consist of layers of interconnected nodes, or "neurons," that process information. Each connection carries a numerical "weight" that is adjusted during training, typically by gradient descent on a measure of error, so the network's performance improves over time. The result is a system that lets machines "think" in a very specific, data-driven way.
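
To make "weights that adjust as the network learns" concrete, here is a minimal sketch in plain Python. It assumes a single neuron with a sigmoid activation, a squared-error loss, and plain gradient descent. The starting weights, learning rate, and training example are made up for illustration. It shows one neuron's output moving toward a target as its weights are updated.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_step(inputs, target, weights, bias, lr=0.5):
    """One gradient-descent step on the squared error 0.5 * (y - target) ** 2."""
    y = neuron(inputs, weights, bias)
    # Chain rule: dLoss/dz = (y - target) * sigmoid'(z), and sigmoid'(z) = y * (1 - y).
    delta = (y - target) * y * (1.0 - y)
    # dz/dw_i = x_i and dz/dbias = 1, so each weight moves against its own gradient.
    new_weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * delta
    return new_weights, new_bias

# Hypothetical starting weights and a single training example.
weights, bias = [0.1, -0.2], 0.0
x, target = [1.0, 0.5], 1.0

before = neuron(x, weights, bias)
for _ in range(100):
    weights, bias = train_step(x, target, weights, bias)
after = neuron(x, weights, bias)
print(f"output before training: {before:.3f}, after: {after:.3f}")
```

Real networks apply the same kind of update to every weight at once, with the gradients computed by backpropagation.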

The core idea behind ANNs is to approximate complex functions. Suppose you have a lot of data and want to find a hidden rule or pattern within it. An ANN can often learn a good approximation of that rule from examples alone. This capability is what makes ANNs so versatile across so many fields today, and it is a fundamental piece of the larger picture of artificial intelligence.
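
One way to see function approximation in action is a network whose weights are computed rather than learned. The sketch below is plain Python; the function names and the target function x² are illustrative choices. It builds a one-hidden-layer network of ReLU units whose output linearly interpolates a target function between evenly spaced points. More hidden units give a closer fit, which is the intuition behind universal approximation results. A trained network would have to find such weights from data instead.

```python
def relu(z: float) -> float:
    return max(0.0, z)

def build_relu_interpolator(f, a, b, n):
    """One-hidden-layer ReLU network that linearly interpolates f at
    n + 1 evenly spaced points on [a, b] (weights are computed, not trained)."""
    xs = [a + (b - a) * i / n for i in range(n + 1)]
    ys = [f(x) for x in xs]
    slopes = [(ys[i + 1] - ys[i]) / (xs[i + 1] - xs[i]) for i in range(n)]
    # Hidden unit i computes relu(x - xs[i]); its output weight is the change
    # in slope at that knot, so the sum bends exactly where f's chords bend.
    out_weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, n)]
    knots = xs[:-1]

    def net(x: float) -> float:
        hidden = [relu(x - k) for k in knots]
        return ys[0] + sum(w * h for w, h in zip(out_weights, hidden))

    return net

def max_error(f, net, a, b, samples=1000):
    """Largest absolute gap between f and the network on a fine grid."""
    pts = [a + (b - a) * i / samples for i in range(samples + 1)]
    return max(abs(f(p) - net(p)) for p in pts)

def square(x: float) -> float:
    return x * x

for n in (2, 5, 20):
    net = build_relu_interpolator(square, 0.0, 1.0, n)
    print(f"{n:2d} hidden units -> max error {max_error(square, net, 0.0, 1.0):.5f}")
```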

The Building Blocks of ANN: Layers and Connections

To get a feel for how ANNs operate, it helps to look at their basic structure. They are not one big blob of code; they are built from distinct layers, each with a specific job. This layered approach is crucial to their success. It lets them handle complex tasks by breaking them into a sequence of simpler transformations, much like building an intricate machine piece by piece.

Fully Connected (Feedforward) Networks

One of the most common types of ANN is the "fully connected" feedforward network. The two terms describe different properties:

- "Fully connected" means every neuron in one layer is connected to every neuron in the next layer.
- "Feedforward" means there are no connections going backwards. Information moves in one direction only, from the input layer, through one or more "hidden" layers, to the output layer.

Convolutional networks, for example, are feedforward but not fully connected. The fully connected feedforward network is a straightforward design, but a powerful one.
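
A forward pass through a small fully connected feedforward network can be sketched in plain Python as follows. The layer sizes, the weights, and the choice of ReLU for the hidden layer are arbitrary assumptions for illustration; nothing here is trained. Each neuron in a layer reads every output of the previous layer, and data only flows forward.

```python
def relu(z: float) -> float:
    return max(0.0, z)

def identity(z: float) -> float:
    return z

def dense(inputs, weights, biases, activation):
    """Fully connected layer: each output neuron takes a weighted sum of ALL inputs."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hypothetical network: 3 inputs -> 4 hidden neurons -> 2 outputs.
# One weight row per neuron; each row has one weight per input.
W1 = [[0.2, -0.5, 0.1],
      [0.7, 0.3, -0.2],
      [-0.4, 0.6, 0.9],
      [0.05, 0.05, 0.05]]
b1 = [0.0, 0.1, -0.1, 0.0]
W2 = [[0.3, -0.6, 0.8, 0.1],
      [-0.2, 0.4, 0.5, -0.3]]
b2 = [0.0, 0.05]

def forward(x):
    hidden = dense(x, W1, b1, relu)          # input layer -> hidden layer
    return dense(hidden, W2, b2, identity)   # hidden layer -> output layer

print(forward([1.0, 2.0, 3.0]))
```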

"FC" (fully connected) and "Linear" mean the same thing in this context; PyTorch, for example, calls this layer nn.Linear. Each neuron in such a layer computes a weighted sum of its inputs and adds a bias, y = Wx + b. Strictly speaking, that is an affine transformation. Stacking several of them with nothing in between still yields a single affine transformation. The ability to learn complex, non-linear relationships therefore comes from combining linear layers with non-linear activation functions such as ReLU. With that pairing, linear layers are the workhorses that do much of the heavy lifting in a network.
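
The following sketch checks the point about stacking. It is plain Python with made-up weights and hypothetical helper names. Two linear layers with no activation between them compute exactly the same function as one linear layer with merged weights W = W2·W1 and bias b = W2·b1 + b2.

```python
def matvec(W, x):
    """Matrix-vector product W @ x for lists of lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Matrix product A @ B: (A @ B)[i][j] = sum_k A[i][k] * B[k][j]."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def linear(x, W, b):
    """A 'Linear'/FC layer: y = W x + b (an affine map)."""
    return [v + bi for v, bi in zip(matvec(W, x), b)]

# Hypothetical layer sizes: 2 inputs -> 3 hidden -> 1 output, no activation.
W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
b1 = [0.1, 0.2, 0.3]
W2 = [[1.0, -1.0, 0.5]]
b2 = [0.4]

x = [2.0, -1.0]
stacked = linear(linear(x, W1, b1), W2, b2)

# The same mapping as ONE linear layer: W = W2 W1, b = W2 b1 + b2.
W_merged = matmul(W2, W1)
b_merged = [v + bi for v, bi in zip(matvec(W2, b1), b2)]
merged = linear(x, W_merged, b_merged)

print(stacked, merged)  # equal up to floating-point rounding
```

Inserting a non-linearity such as ReLU between the two layers breaks this equivalence, which is why activation functions are essential.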

Multilayer Perceptrons (MLP)

Building on fully connected networks, we come to Multilayer Perceptrons, or MLPs. A common rule of thumb is that a single-layer perceptron is simply called a perceptron, while a multilayer perceptron (MLP) ≈ artificial neural network (ANN). The distinction matters. A single perceptron is quite basic: it can only solve linearly separable problems, where one straight line (or, in higher dimensions, one hyperplane) separates the classes. Stack perceptrons into multiple layers, with non-linear activations between them, and you get an MLP, which is far more capable.
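
The classic perceptron learning rule illustrates the linear-separability limit. In this plain-Python sketch the epoch limit and learning rate are arbitrary, and the weights are nudged whenever a point is misclassified. AND is linearly separable, so training converges. XOR is not: no setting of the two weights and bias can classify all four points, so training never converges.

```python
def predict(w, b, x):
    """Step activation: output 1 if the weighted sum crosses zero, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

def train_perceptron(data, epochs=20, lr=1.0):
    """Classic perceptron rule: on each mistake, move the weights toward the target."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in data:
            err = target - predict(w, b, x)   # -1, 0, or +1
            if err != 0:
                mistakes += 1
                w = [w[0] + lr * err * x[0], w[1] + lr * err * x[1]]
                b += lr * err
        if mistakes == 0:
            return w, b, True    # a full pass with no mistakes: converged
    return w, b, False           # gave up after `epochs` passes

AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

for name, data in [("AND", AND), ("XOR", XOR)]:
    w, b, converged = train_perceptron(data)
    print(name, "converged" if converged else "did not converge")
```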

So how many layers make an MLP "multi-layer"? Generally, a network with 1-2 hidden layers can already be called multi-layer; the more precise name is a (shallow) neural network. Even such a simple network is therefore a multilayer perceptron, and thus a type of ANN. The hidden layers are where the interesting work happens. They let the network learn increasingly abstract features from the input data, which is what allows it to capture intricate patterns.
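
Adding a single hidden layer is enough to solve XOR. This sketch uses hand-picked weights, chosen for readability rather than learned, and a step activation. One hidden unit acts as OR and the other as AND. The output fires for "OR but not AND," which is exactly XOR. A trained MLP would typically use smooth activations and learn comparable weights by gradient descent.

```python
def step(z: float) -> int:
    """Threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0

def xor_mlp(x1: int, x2: int) -> int:
    # Hidden layer: two perceptrons with hand-picked (not learned) weights.
    h_or = step(1.0 * x1 + 1.0 * x2 - 0.5)   # fires if at least one input is 1
    h_and = step(1.0 * x1 + 1.0 * x2 - 1.5)  # fires only if both inputs are 1
    # Output layer: "OR but not AND" is exactly XOR.
    return step(1.0 * h_or - 1.0 * h_and - 0.5)

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, "->", xor_mlp(a, b))
```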

ANN and SNN: A Complementary Relationship

ANNs and SNNs can be complementary. SNNs, or Spiking Neural Networks, are another type of neural network, but they work quite differently from traditional ANNs. Neurons in an ANN pass continuous-valued activations from layer to layer. Neurons in an SNN instead communicate using discrete "spikes" over time, much more like biological neurons.
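
To contrast spikes with continuous activations, here is a minimal discrete-time integrate-and-fire neuron in plain Python. The threshold, the leak factor, and the "reset by subtraction" rule are modeling assumptions chosen for simplicity; real SNN simulators offer many variants. The neuron accumulates input into a membrane potential and emits a spike (a 1) whenever the potential crosses the threshold.

```python
def integrate_and_fire(inputs, threshold=1.0, leak=1.0):
    """Discrete-time (leaky) integrate-and-fire neuron.

    leak=1.0 means no leak (plain integrate-and-fire); leak < 1.0 lets the
    membrane potential decay each step. Returns one 0/1 spike per time step.
    """
    v = 0.0
    spikes = []
    for current in inputs:
        v = leak * v + current      # integrate input into the membrane potential
        if v >= threshold:
            spikes.append(1)
            v -= threshold          # reset by subtraction after a spike
        else:
            spikes.append(0)
    return spikes

print(integrate_and_fire([0.25] * 10))
print(integrate_and_fire([0.25] * 10, leak=0.5))
```

With leak=1.0, a steady input of 0.25 produces one spike every four steps. With leak=0.5, the potential settles at 0.5 and the neuron never fires, which shows how leakage lets weak inputs fade away.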

Each type has its own strengths. ANNs are described as "information-rich": their continuous activations mean feature information is basically not lost as it passes through the network, so they are very good at preserving the nuanced details in their data. SNNs, on the other hand, are often praised for their energy efficiency, particularly on dedicated neuromorphic hardware. They are also praised for handling temporal data more naturally. Combining these strengths could lead to even more capable systems.

Researchers are also exploring how to translate structures between the two kinds of network. One description of this mapping is that "linear layers in ANN, for example, convolution, average pooling, BN layers, etc., are mapped to synaptic layers in SNN. Nonlinear layers in ANN, for example, activation function ReLU…" This kind of ANN-to-SNN conversion is an active research area. It aims to combine the accuracy of ANNs with the efficiency of spiking processing.
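
One widely used idea in rate-based ANN-to-SNN conversion is that a ReLU activation can be approximated by the firing rate of an integrate-and-fire neuron. The plain-Python sketch below is not claimed to be the specific mapping quoted above, and its 100-step window and threshold of 1.0 are assumptions. It drives such a neuron with a constant input and compares the resulting spike rate to a ReLU clipped at 1. The clip is needed because the neuron can fire at most once per step.

```python
def if_rate(current: float, steps: int = 100, threshold: float = 1.0) -> float:
    """Firing rate (spikes per step) of an integrate-and-fire neuron driven by a constant input."""
    v, count = 0.0, 0
    for _ in range(steps):
        v += current                # integrate the input
        if v >= threshold:
            count += 1
            v -= threshold          # reset by subtraction keeps the leftover charge
    return count / steps

def clipped_relu(z: float, ceiling: float = 1.0) -> float:
    """ReLU capped at `ceiling`, matching the neuron's max rate of one spike per step."""
    return min(max(z, 0.0), ceiling)

for c in [-0.5, 0.0, 0.2, 0.55, 0.9, 1.5]:
    print(f"input {c:5.2f}  clipped ReLU = {clipped_relu(c):.2f}  IF rate = {if_rate(c):.2f}")
```

Over a window of T steps the rate stays within about 1/T of the clipped ReLU. Longer simulation windows therefore buy accuracy at the cost of latency, which is one of the practical tensions in converted SNNs.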

Applications and Impact: Where ANNs Shine

ANNs are highly versatile. They appear in countless applications across many fields, where they tackle complex problems while often working quietly behind the scenes.

One example is stock price prediction. People ask questions such as "why do I use lstm, svm, ann to predict stock prices, and the effect is very good?" This shows ANNs being used for financial forecasting alongside other machine learning models. These include LSTMs (Long Short-Term Memory networks, a type of recurrent neural network) and SVMs (Support Vector Machines). The ability of ANNs to learn from historical data and pick up subtle patterns makes them attractive tools for anticipating market movements. It is a hard problem, though. Market data is noisy, and results that look very good in a backtest deserve scrutiny, starting with a check that no information from the future leaked into training.
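
Whatever model is used, results like "the effect is very good" often depend on how the data was prepared. This plain-Python sketch shows two common precautions; the prices are made-up numbers and the helper names are hypothetical. First, a price series is turned into (past window, next value) examples. Second, the train and test sets are split chronologically instead of shuffled, so no future prices leak into training. The sketch also computes a naive "tomorrow equals today" baseline, which any LSTM, SVM, or ANN should beat before its results are taken seriously.

```python
def make_windows(series, window):
    """Turn a series into (previous `window` values, next value) pairs."""
    X, y = [], []
    for i in range(len(series) - window):
        X.append(series[i:i + window])
        y.append(series[i + window])
    return X, y

def chronological_split(X, y, train_fraction=0.8):
    """Split WITHOUT shuffling: every test example comes after every training example."""
    cut = int(len(X) * train_fraction)
    return X[:cut], y[:cut], X[cut:], y[cut:]

def naive_baseline_mae(X, y):
    """Mean absolute error of predicting 'next value = last value in the window'."""
    return sum(abs(w[-1] - t) for w, t in zip(X, y)) / len(y)

# Made-up closing prices, purely for illustration.
prices = [100.0, 101.5, 100.8, 102.2, 103.0, 102.4, 104.1, 105.0, 104.3, 106.2]

X, y = make_windows(prices, window=3)
X_train, y_train, X_test, y_test = chronological_split(X, y)
print(len(X_train), "training windows,", len(X_test), "test windows")
print("naive baseline MAE on test set:", naive_baseline_mae(X_test, y_test))
```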

Beyond finance, ANNs are fundamental to image recognition, natural language processing, and medical diagnosis. They are even used in creative fields to generate art or music. A city-building game like Anno 1800 is not about ANNs, but it is a useful picture of the kind of problem they suit: managing and optimizing a complex system in which every piece has to work together.

The Human Element Behind ANN's Progress

It's easy to get caught up in the technical details of ANNs, but it's important to remember the people who make it all happen. One striking observation is that "ANN is so powerful, mainly because the number of people developing ANN is more than an order of magnitude higher than that of SNN." The progress of a technology is often tied directly to the collective effort of the people working on it. When you get right down to it, it's a very human story.

Think about it: "With so many talented programmers optimizing ANN, the accuracy naturally gets higher and higher, and the functions become more and more powerful." This is not just about algorithms; it's about countless hours of research, coding, testing, and refinement. Programmers and researchers keep pushing the boundaries, finding ways to make ANNs more accurate, faster, and more efficient. It is a collaborative effort that drives the whole field forward.

The same dynamic appears elsewhere in technology. In semiconductor manufacturing, "FinFET defeated SOI": the FinFET transistor design won out over SOI-based alternatives as the mainstream choice for leading-edge processes. Likewise, ANN's dominance in many areas reflects continuous innovation and the sheer volume of ingenuity poured into its development. In a race like this, the most robust and heavily optimized solution tends to prevail.

ANN in Academia and Research

The development of ANNs isn't confined to tech companies; it is rooted in academic research, especially in mathematics and computer science. Prestigious mathematical journals are one venue for such research. One detail is worth clearing up first. In journal names such as "Ann. Mat. Pura Appl" and "Math Ann," "Ann" abbreviates Annali or Annalen ("annals"), not artificial neural networks. These are pure mathematics journals, and their connection to ANNs is indirect, through the mathematical foundations the field relies on.

For many mathematicians, the goal is to publish their papers in journals such as "Ann. Mat. Pura Appl," "Journal d'Analyse Mathématique," "Math Ann," "Crelle Journal," "Compositio," "Adv Math," and "Selecta Math." Publishing in these journals brings two benefits. First, "the quality of one's paper is guaranteed to a certain extent, and secondly, one can gain a certain status in the academic circle." Getting work accepted in them is a significant achievement.

These journals are often mentioned alongside the "mathematics four major journals." That informal label for the field's top tier usually refers to Annals of Mathematics, Acta Mathematica, Inventiones Mathematicae, and the Journal of the AMS. This kind of academic rigor matters for applied fields like ANNs as well. Foundational theory is developed, tested, and refined in such venues, and it provides the bedrock for practical applications. Just as a skyscraper needs a solid foundation, the powerful ANNs we see today depend on this deep academic work.

Knowledge sharing matters too. Zhihu, a Chinese online question-and-answer community, describes itself as a place where "people better share knowledge, experience, and insights, and find their own answers." Open discussion of this kind is valuable for researchers and practitioners alike. It helps spread ideas and can spark new breakthroughs in areas like ANNs.

Frequently Asked Questions About ANN

What is the main difference between a single-layer perceptron and a Multilayer Perceptron (MLP)?

A single-layer perceptron is a very simple neural network. It can only solve problems where the classes can be separated by a single straight line (a hyperplane in higher dimensions), such as the logical AND of two inputs. It cannot learn XOR, for example. An MLP adds one or more "hidden" layers with non-linear activations between the input and output. These layers let it learn much more complex, non-linear patterns in the data, a big leap in capability.

Why are Artificial Neural Networks considered "information-rich"?

ANNs are often described as "information-rich" because they pass continuous-valued activations through their layers rather than, say, binary spikes. As a result, they tend to retain much of the detail and nuance of the input data. Their many interconnected nodes and learned weights aim to preserve the feature information that matters for the task, so relatively little is lost as data flows through the network.

How do academic journals contribute to the development of ANN?

Academic journals and conference proceedings are crucial for the advancement of ANNs and related fields. Much neural network research appears at machine learning conferences such as NeurIPS, ICML, and ICLR. These are the venues where researchers publish new findings, theories, and experimental results, and peer review helps maintain standards of quality and accuracy. This is how new knowledge gets recognized and shared, building a collective understanding for everyone involved.

Conclusion

So, we've taken a good look at Artificial Neural Networks, or ANNs, and how they're shaping our world. These systems are at the forefront of machine learning, from their basic structure of fully connected layers and MLPs to their intriguing relationship with SNNs. They're used for everything from image recognition to stock price prediction, and academic research keeps pushing their boundaries. Above all, the collective work of countless researchers and developers is the real driving force behind their progress. The effort to understand and build smarter systems continues, and ANNs remain a central part of that story.

- Blake Shelton Salary On The Voice

- Harry Bring Death

- Are Mike Wolf And Danielle Coby Married In American Pickers

- Stephanie Ruhle Nude

- Luis Miguel Brother Sergio

Courtenay Nantz | Furman University

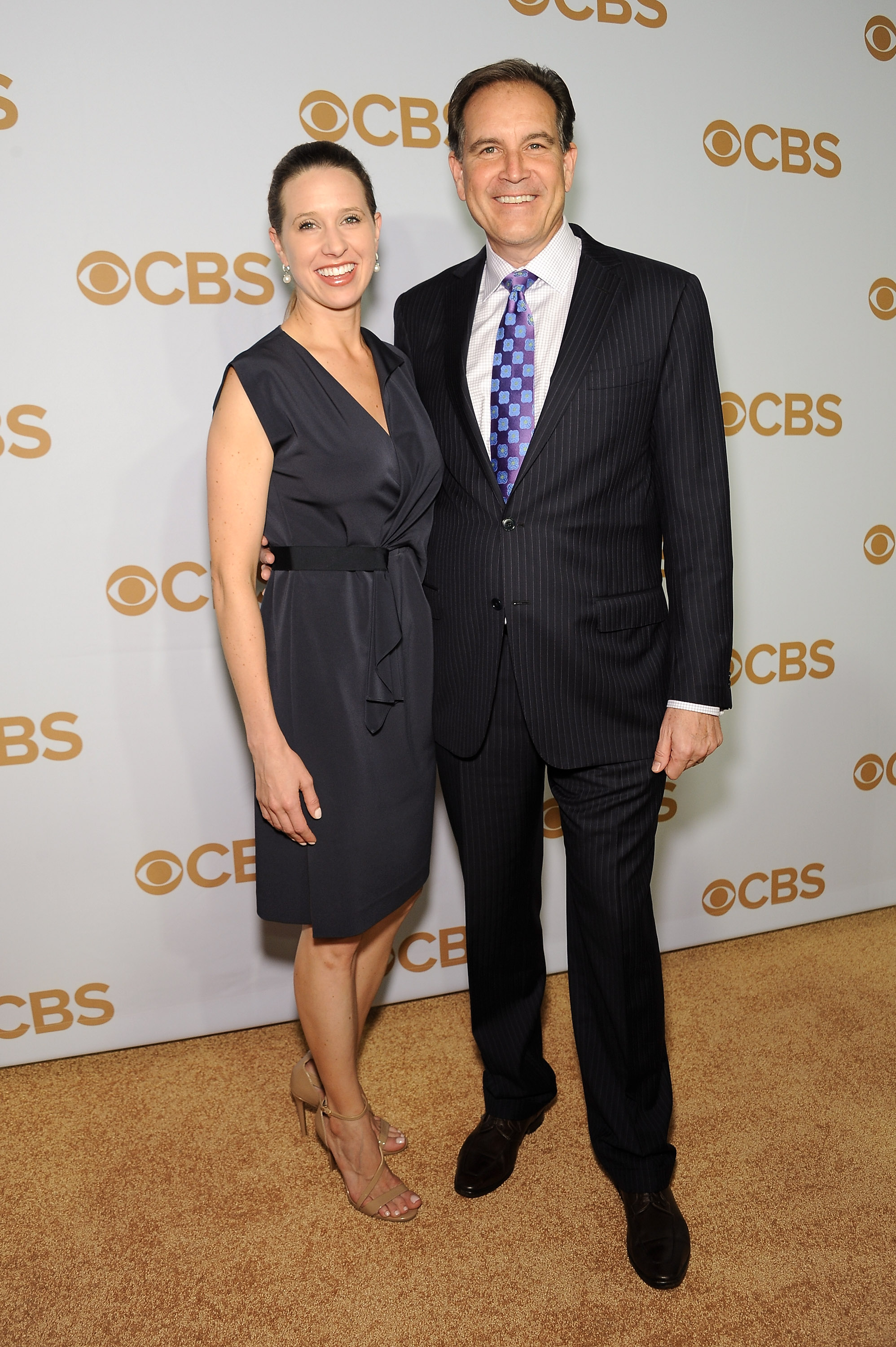

Ann-Lorraine Carlsen Nantz – Everything We Know about Jim Nantz' Ex-wife

Ann-Lorraine Carlsen Nantz – Everything We Know about Jim Nantz' Ex-wife